For a while, I have suspected that I have a simpler way of creating entity networks. In my book, I show how Part-of-Speech Tagging (POS-tagging) and Named Entity Recognition (NER) are both useful in creating entity network graphs. To save everyone a lot of time, here are some considerations:

POS-tagging: this allows you to identify the part of speech any word belongs to. In building entity networks, I am looking for NNPs, but if I wanted to build a knowledge graph, I’d add complexity. Read more here.

NER: this only extracts entities, but there are many types of entities that can be extracted. I primarily extract people, places, and organizations, but there are more types that can be used. This is a good book about spaCy.

My book covers this in detail. Please read it to learn more. I show how to create these entity graphs.

Yesterday, I decided to revisit Alice in Wonderland and see if my idea could either:

More easily create the network

More accurately create the network

Do both

My gut feeling is that this new approach is doing both. Graph construction and cleanup both took fewer steps.

How Does It Work?

In my book, I show my previous approach. The main difference is that the previous approach used a simple loop to wire up the entities, while the new approach lets bipartite projection do the work for me. It’s easier and faster.

You can see today’s new code implementation here. You can modify it to use any text.

I used the entity extraction code from my book and made one small cosmetic improvement. I added in a ‘tqdm’ status bar, so that we can see how far along in the process the NER step is, as that takes some time.

Notice that it took 47 seconds to read Alice in Wonderland and extract the entities from each sentence.

NER is not exact. It occasionally produces both false positives and false negatives. If it doesn’t recognize a name as a name, it won’t catch it. Other times, it’ll trip up on capitalization and treat a non-entity as an entity. But the code shows how to quickly remove junk. In the above screenshot, Longitude, Antipathies, and Ma’am shouldn’t have been extracted as entities. As NER models improve, this problem should decrease over time. I am using spaCy for NER.

The next thing I do is convert this entity list into a Pandas DataFrame with columns for the entity and the sentence it belongs to. It might be better to call this column ‘row’ rather than ‘sentence’, but it is what it is.
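To make the shape of that table concrete, here is a minimal, dependency-free sketch. The input format (one list of entity names per sentence) and the names `entities_per_sentence` and `rows` are assumptions made for illustration, not taken from the notebook.

```python
# Hypothetical NER output: one list of entity names per sentence.
entities_per_sentence = [
    ["Alice", "Rabbit"],  # sentence 0
    [],                   # sentence 1 had no entities
    ["Alice", "Dinah"],   # sentence 2
]

# One row per (entity, sentence) pair. Sentences without entities add no rows.
rows = [
    {"entity": entity, "sentence": sentence_number}
    for sentence_number, entities in enumerate(entities_per_sentence)
    for entity in entities
]

for row in rows:
    print(row["entity"], row["sentence"])
```

Passing a list of dicts like `rows` to `pandas.DataFrame` gives a two-column table of this kind.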

With that, we have everything we need to create a Bipartite Graph of entities and the sentences they belong to.

I have actually shown how to do this a few times already. My Eureka moment, when I realized this might be better than my old approach, came when I used it on the Titanic dataset. I was shocked by how well it was able to network families together.

After doing that, I did not push the idea further. I was just happy with the results, but I already had a hunch that this could replace my former method.

I then used it with the arXiv networks, as a bipartite graph could be created using authors and research papers.

Yesterday, I applied this bipartite projection approach to Alice in Wonderland, to see if it’d create a network as good as or better than the one in my book. Turns out, it did, and much more easily. After some validation, I expect that this will become my primary approach for creating entity networks from text.

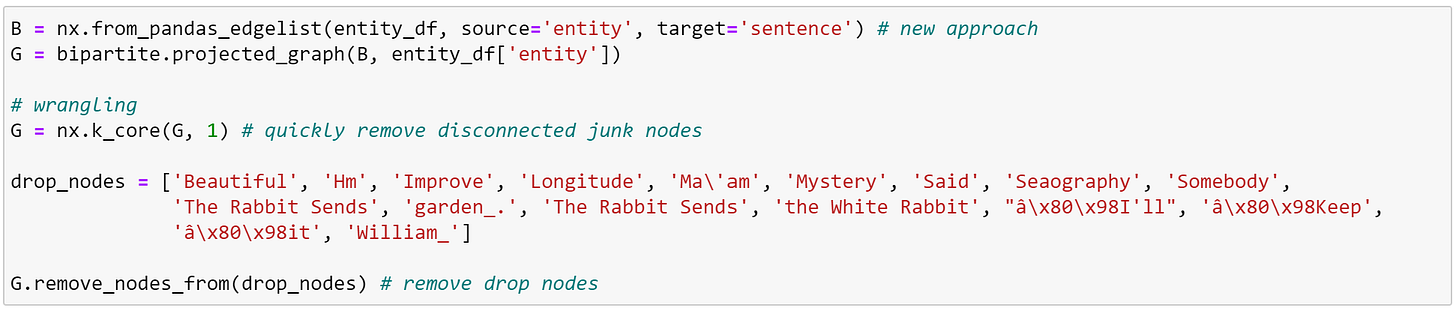

This is the code for this new approach. Bipartite projection is wiring up the nodes, where I previously wrote a loop to do it for me. I’ll explain the code:

I use the entity_df as input data, as the edgelist. I call this B as it is a bipartite graph.

I then do what is called bipartite projection, to convert this into an entity network graph rather than a bipartite graph. Read more here.
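For readers who want to see what the projection does under the hood, here is a small pure-Python sketch. It is not the notebook's code: the toy `rows` data and the function name `project_on_entities` are made up for illustration. Two entities get an edge whenever they appear in the same sentence.

```python
from collections import defaultdict
from itertools import combinations

# Toy (entity, sentence_number) pairs standing in for entity_df.
rows = [
    ("Alice", 0), ("Rabbit", 0),
    ("Alice", 1), ("Dinah", 1),
    ("Longitude", 2),
    ("Alice", 3), ("Rabbit", 3),
]

def project_on_entities(rows):
    """Return {entity: set of entities that share at least one sentence}."""
    # Bipartite view: group the entities attached to each sentence node.
    by_sentence = defaultdict(set)
    for entity, sentence in rows:
        by_sentence[sentence].add(entity)

    # Projection: connect every pair of entities found in the same sentence.
    graph = {entity: set() for entity, _ in rows}
    for entities in by_sentence.values():
        for a, b in combinations(sorted(entities), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph

G = project_on_entities(rows)
print(sorted(G["Alice"]))      # ['Dinah', 'Rabbit']
print(sorted(G["Longitude"]))  # [] (an isolate: it never shares a sentence)
```

Graph libraries offer this ready-made. In NetworkX, for example, `networkx.algorithms.bipartite.projected_graph` performs the same kind of projection on a bipartite graph.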

I drop all the isolate nodes, as most isolate nodes were false-positives from NER. There was no connection from them to other characters. It would be better to skip this step and manually add junk nodes to the drop_nodes list, but this saves time and can be helpful.

I then (in another cell) inspected the remaining node names, added every junk node I found to the drop_nodes list, and removed them, which cleared out the rest of the junk.
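The two cleanup steps can be sketched like this on a toy adjacency dict. The graph contents and the helper names `drop_isolates` and `remove_nodes` are illustrative assumptions; only the `drop_nodes` list name comes from the description above.

```python
def drop_isolates(graph):
    """Keep only nodes that have at least one neighbor."""
    return {node: nbrs for node, nbrs in graph.items() if nbrs}

def remove_nodes(graph, nodes):
    """Remove the given nodes and every edge that touches them."""
    nodes = set(nodes)
    return {n: nbrs - nodes for n, nbrs in graph.items() if n not in nodes}

# Toy entity network: "Longitude" is an isolate, "Ma'am" is connected junk.
G = {
    "Alice": {"Rabbit", "Dinah", "Ma'am"},
    "Rabbit": {"Alice"},
    "Dinah": {"Alice"},
    "Ma'am": {"Alice"},
    "Longitude": set(),
}

# Cleanup step 1: drop isolates, which were mostly NER false positives.
G = drop_isolates(G)

# Cleanup step 2: drop the junk nodes found by inspecting the names by hand.
drop_nodes = ["Ma'am"]
G = remove_nodes(G, drop_nodes)

print(sorted(G))  # ['Alice', 'Dinah', 'Rabbit']
```

Dropping isolates first keeps the manual list short, at the cost of also discarding any real entity that happened to appear alone in every sentence.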

Two steps for construction, two steps for cleanup. Super simple. How does it look?

I think this looks great. The largest cluster is the social network of Alice in Wonderland. Outside of this core cluster, notice that some of the ‘place’ entities are linked together, because they are written about together in the story.

This is very clean already. It never felt this easy when I first figured this out using POS-tagging, nor when I figured it out for my book using NER. It’s less work for construction, and less work for cleaning, which means more time for exploration, fun, and finding insights.

Network Science in Wonderland

We are using a combination of software engineering, data science, network science, and social science to analyze the social networks found in TEXT that was written in 1865. This does not replace reading, nor does it eliminate the value or importance of reading. This gives us new ways of learning, and new ways of interacting with literature. It is a learning tool.

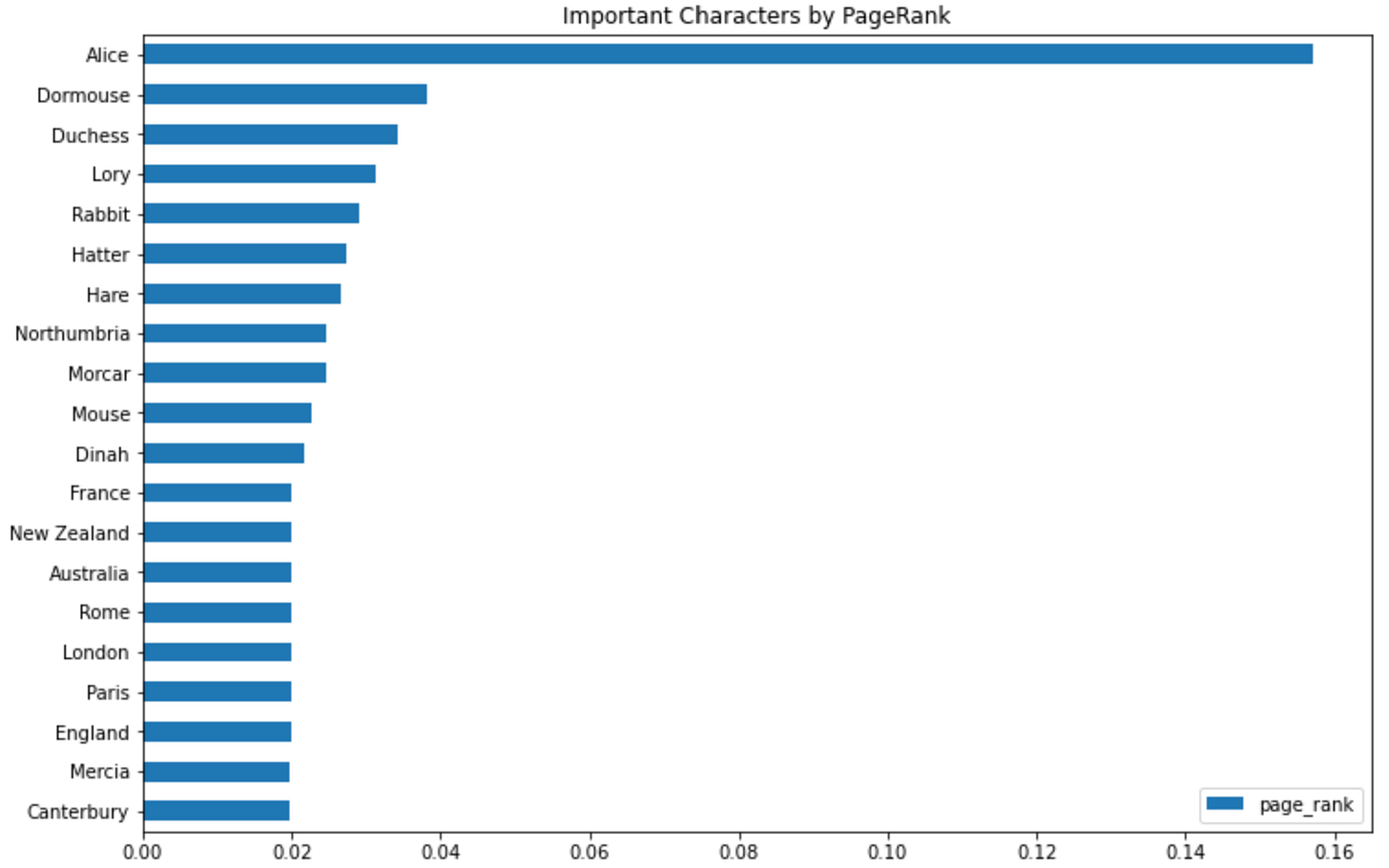

Now that the network has been built, we can use PageRank to determine important characters.

This is a bar chart of the PageRank values of each character. Alice has a much higher PageRank value, and that makes sense as she is the main character. She is connected to many of the characters. Dormouse is the second most important character in Alice in Wonderland, based on network characteristics.
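For intuition about what PageRank computes, here is a minimal power-iteration sketch for an undirected graph stored as an adjacency dict. The toy edges are invented for illustration and are not results from the book, and a library routine such as `networkx.pagerank` would normally be used instead. The damping factor of 0.85 is the conventional default.

```python
def pagerank(graph, damping=0.85, iterations=100):
    """Power-iteration PageRank on an undirected adjacency dict."""
    nodes = list(graph)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes without neighbors is spread evenly over all nodes.
        dangling = sum(rank[node] for node in nodes if not graph[node])
        new_rank = {}
        for node in nodes:
            # Each neighbor passes on an equal share of its current rank.
            incoming = sum(rank[nbr] / len(graph[nbr]) for nbr in graph[node])
            new_rank[node] = (1 - damping) / n + damping * (incoming + dangling / n)
        rank = new_rank
    return rank

# Toy network with made-up edges.
G = {
    "Alice": {"Rabbit", "Dinah", "Queen"},
    "Rabbit": {"Alice"},
    "Dinah": {"Alice"},
    "Queen": {"Alice", "King"},
    "King": {"Queen"},
}

ranks = pagerank(G)
for name in sorted(ranks, key=ranks.get, reverse=True):
    print(f"{name}: {ranks[name]:.3f}")
```

In this toy graph the best-connected node comes out on top, which mirrors why a main character who shares sentences with many others ranks highest.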

We can look at the ego networks of the most important characters.

Here is Alice’s ego network.

Here is Alice’s ego network with the center (her own node) dropped. When I have a complex ego network, I often drop the center to be able to see the groups that exist in the ego network. After dropping Alice, I can see that there are two larger cliques or groups present (Mary Ann’s and Cat’s).
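Here is a small sketch of the "drop the center" trick on an adjacency dict. The edges are toy data chosen to form two groups, not the real network, and `ego_network` is a hypothetical helper. NetworkX offers the same option through `networkx.ego_graph(G, n, center=False)`.

```python
def ego_network(graph, center, keep_center=True):
    """Return the subgraph induced by center's neighbors, optionally with center."""
    members = set(graph[center])
    if keep_center:
        members.add(center)
    # Keep only the edges whose endpoints are both inside the ego network.
    return {node: graph[node] & members for node in members}

# Toy graph: Alice touches everyone, and her neighbors form two separate pairs.
G = {
    "Alice": {"Mary Ann", "Rabbit", "Cat", "Queen"},
    "Mary Ann": {"Alice", "Rabbit"},
    "Rabbit": {"Alice", "Mary Ann"},
    "Cat": {"Alice", "Queen"},
    "Queen": {"Alice", "Cat"},
}

ego = ego_network(G, "Alice", keep_center=False)
for node in sorted(ego):
    print(node, sorted(ego[node]))
```

With Alice kept, every node is one step from every other through her. With Alice dropped, only the links among her neighbors remain, so the separate groups become visible.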

Other ego networks can be investigated as well. Run my code and explore!

Neat Finding

While doing bipartite projection, I had an idea. What if I were to project on the sentence number rather than on the character? How would that look?

Now this… this is cool. It basically converted the entity network into a “connect the dots” drawing, and shows the steps for how the characters were wired together. I need to think about this more. I bet that this has uses that I haven’t imagined yet.
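Projecting onto the other side of the bipartite graph is the mirror image of the entity projection: two sentences are linked whenever they mention the same entity. A pure-Python sketch, with toy data and a hypothetical function name:

```python
from collections import defaultdict
from itertools import combinations

# Toy (entity, sentence_number) pairs.
rows = [
    ("Alice", 0), ("Rabbit", 0),
    ("Alice", 1), ("Dinah", 1),
    ("Longitude", 2),
    ("Alice", 3), ("Rabbit", 3),
]

def project_on_sentences(rows):
    """Return {sentence: set of sentences that share at least one entity}."""
    # Group the sentence numbers in which each entity appears.
    by_entity = defaultdict(set)
    for entity, sentence in rows:
        by_entity[entity].add(sentence)

    # Connect every pair of sentences that mention the same entity.
    graph = {sentence: set() for _, sentence in rows}
    for sentences in by_entity.values():
        for a, b in combinations(sorted(sentences), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph

S = project_on_sentences(rows)
for sentence in sorted(S):
    print(sentence, sorted(S[sentence]))
```

Because the nodes are now sentence numbers, the result keeps the order in which the text unfolds, which may be part of why the drawing reads like a sequence of steps.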

That’s All, Folks!

That’s all for today! Thanks for reading! If you would like to learn more about networks and network analysis, please buy a copy of my book!