I’ve been wanting to write this blog post for a while now. I’ve been brainstorming what I wanted to do for weeks, but this is a bit more complicated than whole network analysis, or egocentric network analysis. Yesterday, I figured out how I want to do today’s implementation.

It is important to understand that this is just one implementation. Python dictionaries are very useful for holding temporal networks and context about them, and additional context can be added in steps. I’m going to show how to do that today.

What is Temporal Network Analysis?

This is not covered in the first edition of my book, other than maybe in passing. I will include a chapter in the second edition of my book to discuss Temporal Network Analysis, possibly using parts of today’s post.

Networks evolve over time. Think about your social media accounts. Every day, your followers increase, but your followers can also decrease. Every day you also follow more people, but you probably unfollow people, too.

Also, these days, what I call “artificial influence” has run rampant. Bots are out of control, and social media platforms have never really taken steps to mitigate them, just hand-waved. Artificial influence also happens across the wider internet, not just on social media.

With temporal network analysis, we can analyze how networks change over time. I think of this as network evolution. Today, I’m going to show you ONE implementation of how this can be done, but there are others, and I’ll be thinking about this topic more and more during this iteration of #100daysofnetworks.

Please follow along with the code. This is a bit complicated.

Build the Origin Graph

If you look at the code, you can see that this time I am using a dataset called ‘wilco_content_edgelist.csv’. This network was built using Named Entity Recognition (NER) across several pages related to the band Wilco. It was built using TEXT as input, not direct links. It is a cool approach for building networks, mentioned in my book, and written about by others as well.

You can learn how I built it by reading day 10, or by looking at the code from day 10.

I’m using the whole network as the origin graph, the starting point.

Dropping Connected Components

For this experiment, I am not dropping single nodes or random edges. I am dropping whole connected components, to simulate what would happen if a whole community walked away at once, or if a whole community joined at once.

Why would that ever happen?

Brands are sometimes boycotted. This year, a brand of beer was boycotted, and many people stopped drinking that brand of beer. If we had their social media data and could construct a graph, we could see entire groups walking away. We could potentially identify who was influential in the boycott.

Celebrities are also boycotted or ‘cancelled’ as they say. People gradually lose interest in politicians, too.

So, my idea for today is bigger than just dropping random nodes and edges. I want to see if I can identify whole communities leaving a network, or being added to a network.

The first thing I need to do for this simulation is identify the largest connected components, because they will be useful in removing and adding nodes in batches.

The code for that is pretty simple. Connected component index 0 contains 755 nodes, index 14 contains 14, index 55 contains 7, and index 57 contains 6. Perfect. We’ll do three rounds of removing whole components. The goal is to be able to easily detect the changes, and with more context than the node level.

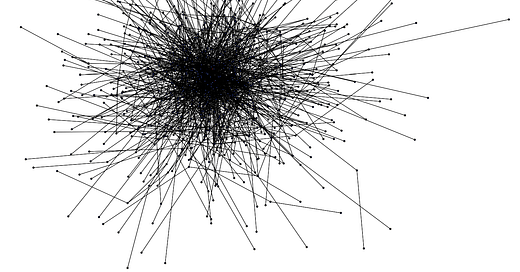
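If you want to try the component step without the Wilco data, here is a minimal sketch on a toy graph. The graph, the `min_size` cutoff, and the variable names are placeholders I made up for illustration; only `nx.connected_components` is the real NetworkX call.

```python
import networkx as nx

# Toy stand-in for the origin graph (not the Wilco data): three components.
origin = nx.Graph()
origin.add_edges_from([
    ("a", "b"), ("b", "c"), ("c", "d"), ("a", "d"),  # 4-node component
    ("x", "y"), ("y", "z"),                          # 3-node component
    ("p", "q"),                                      # 2-node component
])

# nx.connected_components yields one set of nodes per component.
components = [set(nodes) for nodes in nx.connected_components(origin)]

# Map each component's index to its size.
component_sizes = {idx: len(nodes) for idx, nodes in enumerate(components)}

# Keep only components with at least min_size nodes (hypothetical cutoff).
min_size = 3
large_components = {
    idx: size for idx, size in component_sizes.items() if size >= min_size
}

# Print the larger components, biggest first.
for idx, size in sorted(large_components.items(), key=lambda item: -item[1]):
    print(f"component {idx}: {size} nodes")
```

Keeping the component index next to its size makes it easy to pull the matching node set back out of `components` when it is time to drop it.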

The largest component (index 0) is where most of the node connectivity is taking place.

And if I look at another component, it’ll be much smaller and more readable.

This is going to be one component that we remove and try to detect its removal.

Creating “Time Slices” of a Network

My Eureka moment for temporal network analysis happened when I figured out how to chop networks up into what I call time slices. I once watched a documentary that described space and time as a loaf of bread, and that stuck with me over the years. It makes sense programmatically, and it helped me wrap my mind around how to do this. I hope it helps you, too.

The first thing I need to do is create some artificial time slices, removing entire connected components while I do.

Usually, a NetworkX graph is called G, but I am making a move away from that and using a Python dictionary for G, so that I can have time slices in G.

Note that as I increment G, I am also dropping an entire connected component. You can see that in ‘drop_nodes’. I remove the nodes on the bottom lines. As I am using connected components, these nodes are not attached to any other part of the network.
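Here is a rough sketch of the time-slice idea on a toy graph, not the exact code from the notebook. I am assuming each slice is stored as `G[step]`, and that every step copies the previous slice and removes one whole component. The toy edges and the `drop_batches` name are made up for illustration.

```python
import networkx as nx

# Toy origin graph with three connected components.
origin = nx.Graph()
origin.add_edges_from([
    ("a", "b"), ("b", "c"), ("c", "d"), ("a", "d"),  # 4 nodes, 4 edges
    ("x", "y"), ("y", "z"),                          # 3 nodes, 2 edges
    ("p", "q"),                                      # 2 nodes, 1 edge
])

# Largest component first; it stays, the others are dropped one per step.
components = sorted(nx.connected_components(origin), key=len, reverse=True)
drop_batches = [set(nodes) for nodes in components[1:]]

# G is a plain dictionary: time step -> graph at that step.
G = {0: origin.copy()}
for step, drop_nodes in enumerate(drop_batches, start=1):
    time_slice = G[step - 1].copy()           # start from the previous slice
    time_slice.remove_nodes_from(drop_nodes)  # drop one whole component
    G[step] = time_slice

for step, graph in G.items():
    print(step, graph.number_of_nodes(), graph.number_of_edges())
```

Copying before removing nodes matters here. Without the copy, every slice would point at the same graph object and the earlier slices would change too.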

Proof of Concept

Next, in the code, I build a simple proof of concept, comparing G[1] to G[0], to see if I can find the differences.

Using that, I can see which edges were dropped or added, which nodes were dropped or added, I can create a graph of what was dropped so that it can be visually inspected, I can create a graph of what was added for the same reason, and I can add on as many metrics as I want to understand what impact these changes have made on the overall network. Notice that I am looking for changes in density, total degrees, and total edges. Please understand that you can add as many useful metrics as you want or need. Get creative.
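The comparison between two slices comes down to set differences. Below is a minimal sketch of that idea on a toy pair of graphs. The `edge_set` helper and the variable names are my own placeholders, and the sketch assumes node labels can be sorted (strings here) so that each undirected edge has one canonical form.

```python
import networkx as nx


def edge_set(graph):
    """Undirected edges as sorted tuples, so (u, v) and (v, u) compare equal."""
    return {tuple(sorted(edge)) for edge in graph.edges()}


# Toy "before" slice: a triangle plus a separate three-node path.
previous = nx.Graph()
previous.add_edges_from([
    ("a", "b"), ("b", "c"), ("a", "c"),
    ("x", "y"), ("y", "z"),
])

# Toy "after" slice: the path component has left the network.
current = previous.copy()
current.remove_nodes_from(["x", "y", "z"])

# What disappeared and what appeared between the two slices.
dropped_nodes = set(previous.nodes) - set(current.nodes)
added_nodes = set(current.nodes) - set(previous.nodes)
dropped_edges = edge_set(previous) - edge_set(current)
added_edges = edge_set(current) - edge_set(previous)

# Rebuild the dropped part as its own graph so it can be inspected or drawn.
graph_dropped = nx.Graph()
graph_dropped.add_nodes_from(dropped_nodes)
graph_dropped.add_edges_from(dropped_edges)

# Simple before/after metrics; add whichever ones are useful to you.
density_change = nx.density(current) - nx.density(previous)
edge_change = current.number_of_edges() - previous.number_of_edges()
degree_change = (
    sum(degree for _, degree in current.degree())
    - sum(degree for _, degree in previous.degree())
)
```

Because `graph_dropped` is rebuilt from the dropped edges, it keeps the relationships between the nodes that left, which a plain list of node names would lose.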

For reference, this is an image of what was dropped. It is an image of what is no longer in the network, and it has retained the relationships. Imagine a case where a whole community of people left a brand; that central node might be of interest. In this network, these nodes are not people, but imagine people or accounts. The point is that we can see what is no longer in the network. We can see what is gone.

To me, that is very, very, very cool and probably important. Think about use-cases.

Let’s Automate This

I want this to be something reusable that I can use in #100daysofnetworks and any network analysis that I do, so I created a useful function. Please see the code. Running it is simple:

I pass in the temporal graph, and the function adds enrichment. For the first step in the temporal graph, the output looks like this:

It contains the time slice of the graph itself in ‘graph’, and has recorded the original density, degrees, and edges as values for comparison. What’s the next one look like?

I can see that since G[0], there have been some changes. Under ‘dropped_edges’, I can see specifically what edges have been removed. Under ‘dropped_nodes’, I can see specifically what nodes have been removed. I can also see that no edges or nodes have been added. I can see that overall density of the graph actually increased by a little, even though there has been a drop in degrees and edges. Many other metrics could have been added and compared, easily.

And if I visualize ‘graph_dropped’, I can actually see what was removed from the network.

This is better than just a list of nodes. We can see the shape those nodes used to have in the network. We can see that it was a community that dropped. By looking at other slices, I can see the next round of changes.
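For anyone who wants to experiment without the notebook, here is one way such an enrichment function could look. This is a sketch under my own assumptions, not the function from the code. The name `enrich_temporal_graph`, the `*_change` keys, and the `added_*` keys are hypothetical. Only ‘graph’, ‘dropped_edges’, ‘dropped_nodes’, and ‘graph_dropped’ are named in this post.

```python
import networkx as nx


def edge_set(graph):
    """Undirected edges as sorted tuples (assumes sortable node labels)."""
    return {tuple(sorted(edge)) for edge in graph.edges()}


def build_graph(nodes, edges):
    """Small helper that turns a node set and an edge set into a graph."""
    graph = nx.Graph()
    graph.add_nodes_from(nodes)
    graph.add_edges_from(edges)
    return graph


def enrich_temporal_graph(slices):
    """Hypothetical helper: slices maps time step -> nx.Graph."""
    enriched = {}
    previous = None
    for step in sorted(slices):
        graph = slices[step]
        entry = {
            "graph": graph,
            "density": nx.density(graph),
            "degrees": sum(degree for _, degree in graph.degree()),
            "edges": graph.number_of_edges(),
        }
        if previous is not None:
            old = previous["graph"]
            entry["dropped_nodes"] = set(old.nodes) - set(graph.nodes)
            entry["added_nodes"] = set(graph.nodes) - set(old.nodes)
            entry["dropped_edges"] = edge_set(old) - edge_set(graph)
            entry["added_edges"] = edge_set(graph) - edge_set(old)
            entry["graph_dropped"] = build_graph(
                entry["dropped_nodes"], entry["dropped_edges"]
            )
            entry["graph_added"] = build_graph(
                entry["added_nodes"], entry["added_edges"]
            )
            # Change in each metric since the previous slice.
            for metric in ("density", "degrees", "edges"):
                entry[f"{metric}_change"] = entry[metric] - previous[metric]
        enriched[step] = entry
        previous = entry
    return enriched


# Toy slices: a triangle, a three-node path, and a pair, dropped in two steps.
step0 = nx.Graph()
step0.add_edges_from([
    ("a", "b"), ("b", "c"), ("a", "c"),
    ("x", "y"), ("y", "z"),
    ("p", "q"),
])
step1 = step0.copy()
step1.remove_nodes_from(["x", "y", "z"])
step2 = step1.copy()
step2.remove_nodes_from(["p", "q"])

G = enrich_temporal_graph({0: step0, 1: step1, 2: step2})

# Pull one field out across time, skipping step 0, which has no predecessor.
density_changes = [G[step]["density_change"] for step in sorted(G)[1:]]
edge_changes = [G[step]["edges_change"] for step in sorted(G)[1:]]
```

Keeping everything in one dictionary per time step means any new metric only needs one more key, and pulling a field out across steps is a one-line list comprehension.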

Finally, since G is an enriched dictionary, we can investigate the bottom three fields over time.

I can see that there was a bigger initial change to density, and that subsequent changes were smaller. Removing connected components actually increased overall network density. Why is that?
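One likely explanation is in the density formula itself. For a simple undirected graph, density is 2m / (n(n - 1)), where n is the number of nodes and m is the number of edges. Dropping a small, sparse component removes only a few edges from the numerator, while the denominator shrinks roughly with the square of the node count. A quick check with made-up numbers (not the Wilco counts):

```python
def density(n_nodes, n_edges):
    """Density of a simple undirected graph: 2m / (n(n - 1))."""
    if n_nodes < 2:
        return 0.0
    return 2 * n_edges / (n_nodes * (n_nodes - 1))


# Hypothetical network: 10 nodes and 12 edges in total.
before = density(10, 12)

# Drop a sparse component with 4 nodes and 3 edges (a small chain).
after = density(10 - 4, 12 - 3)

print(f"before: {before:.3f}, after: {after:.3f}")
```

The nodes that remain are just as connected to each other as before, but there are far fewer possible edges to compare against, so density goes up.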

I can also see that there was a bigger initial change to degrees, which shows in our work. The values are negative, but it would appear that the drop is slowing. However, this is just an example, and it doesn’t really say anything. This is a HOW post.

What Does it Mean?

What do you think this could be useful for? I mentioned influence, but there are other uses. How would you use this? What metrics would you include to better understand network changes over time? At this point, this is where Network Analysis meets Time Series Analysis. Enjoy!

That’s All, Folks!

That’s all for today! Thanks for reading! If you would like to learn more about networks and network analysis, please buy a copy of my book!
