If you would like to learn more about networks and network analysis, please buy a copy of my book!

Order From Chaos!

In Day 5 of 100daysofnetworks, I created a Wikipedia crawler that can be used to investigate any topic that interests you. You should really use it. It is a lot of fun, and it creates useful network data.

What do I mean by useful?

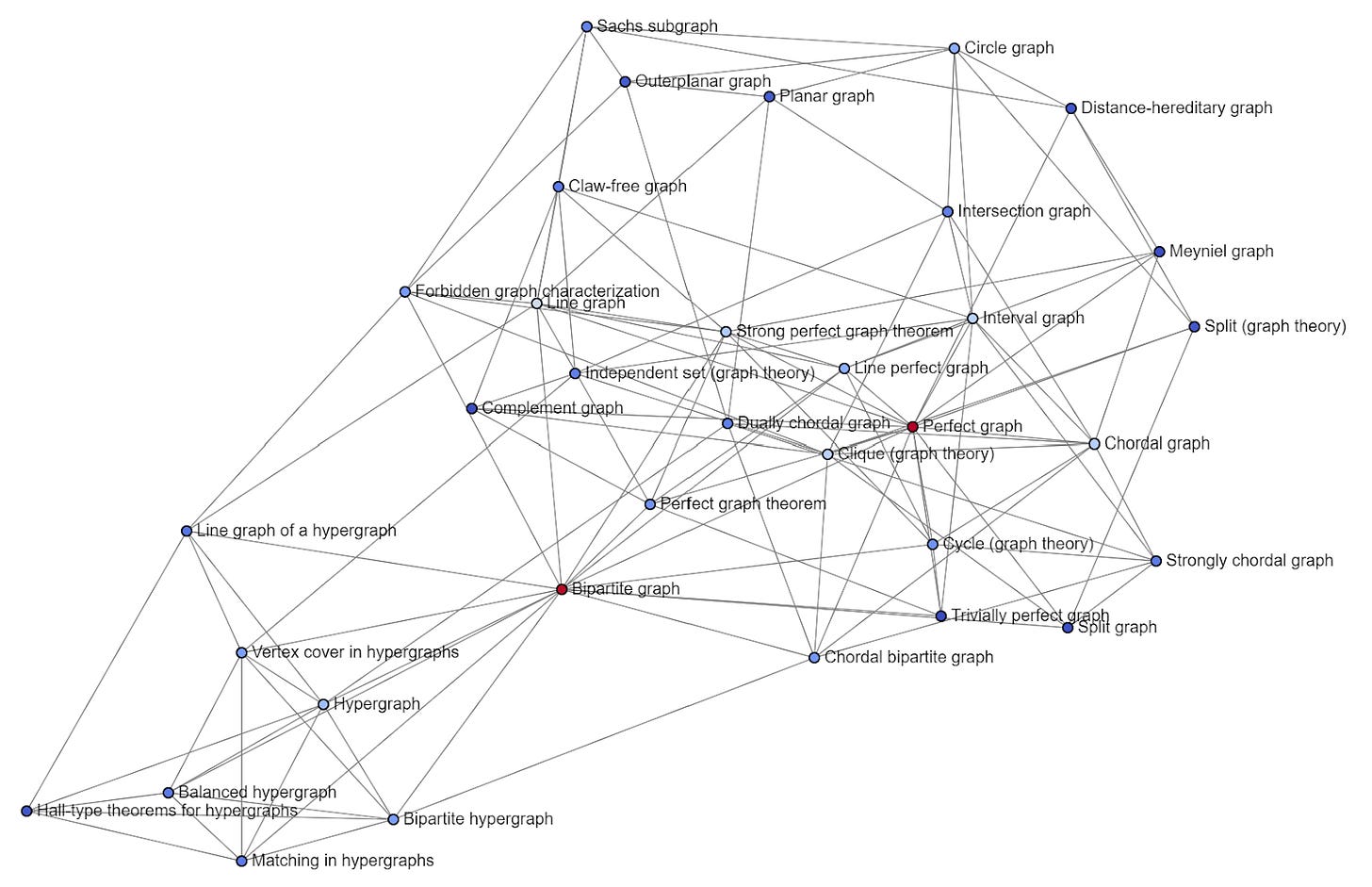

Well, today, I'm going to literally convert a graph into a learning curriculum for myself. I am going to take this:

And turn it into this:

In other words, I am going to turn a complex network into a clean learning curriculum that I can use to learn more about Network Science. I will use this to learn new things, and I will explore them during this series.

Network Spot Checks

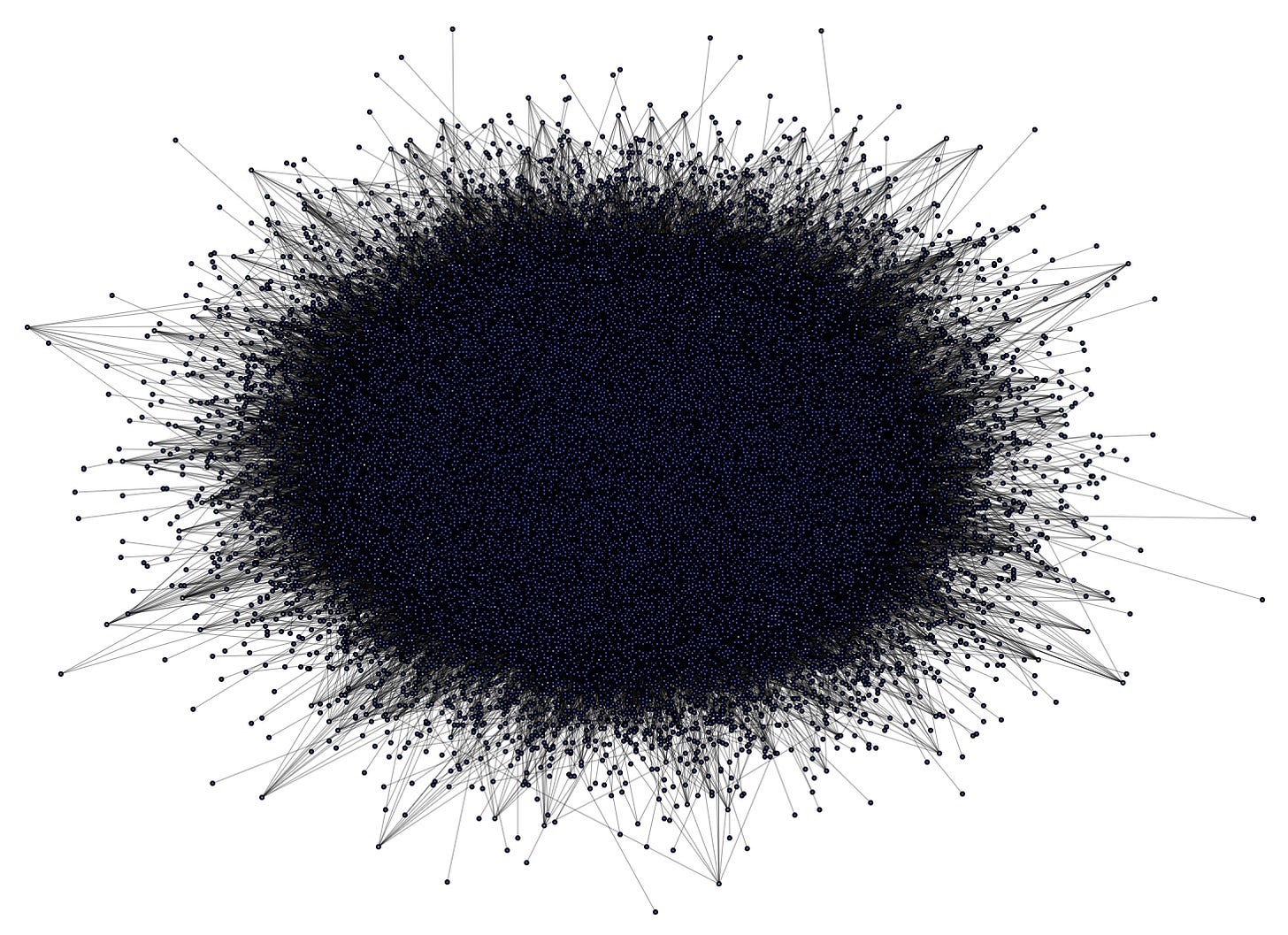

True story. When I started working with networks, I got a lot of pushback for using them, because people could not see how they could be useful. There is too much information in one visualization, and the more complex the network, the more difficult it is to visualize. Look at this mess (scroll up). How are we supposed to pull anything useful out of that ball of yarn?

It is not difficult if you know how. My book shows how, and so has this series. In previous days, I showed a bit of how we can zoom in on parts of networks. Today, we are going to zoom in on communities, and then finish by essentially "peeling the onion," layer by layer. This is a cool technique that is not shown often. I will get to it after the community detection work.

Community Detection Approach

One thing that I have found to be very useful is to look at content from a community perspective. Like attracts like. Pages that are related will link to each other, forming clusters, or communities.
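The post does not say which community detection algorithm the notebook uses, so here is a minimal sketch that assumes networkx and its greedy modularity method, run on a hypothetical mini link graph: two topic clusters joined by a single cross-topic link. Modularity-based methods reward groups that have more internal links than chance would predict, which is "like attracts like" in code form.

```python
from itertools import combinations

import networkx as nx

# Hypothetical mini link graph: two tightly linked topic clusters
network_pages = ["Network science", "Graph theory", "Social network analysis",
                 "Centrality", "Community structure"]
ml_pages = ["Machine learning", "Artificial neural network", "Deep learning",
            "Backpropagation", "Perceptron"]

G = nx.Graph()
G.add_edges_from(combinations(network_pages, 2))  # every page links to every other
G.add_edges_from(combinations(ml_pages, 2))
G.add_edge("Graph theory", "Machine learning")    # a single cross-topic link

# Greedy modularity maximization; returns a list of frozensets, largest first
communities = nx.community.greedy_modularity_communities(G)
for i, members in enumerate(communities):
    print(i, sorted(members))
```

On real crawl data, Louvain (`nx.community.louvain_communities(G, seed=...)`) is a common, faster alternative; pass a seed if you want repeatable results.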

You can see today's code here.

Check out the code to learn how to do the community detection work. In these blog posts, I only write about what I see, not how the code works.

This is the CORE of the largest community in the network. Pay attention to the k_core piece of code under the largest community, and you will see that I am showing only nodes that have five or more edges to other nodes in that core. I only do that for this largest community. I show the other communities completely, as they are much smaller. Looking closer at this, I see many mentions of the word "graph". We are in the right place.

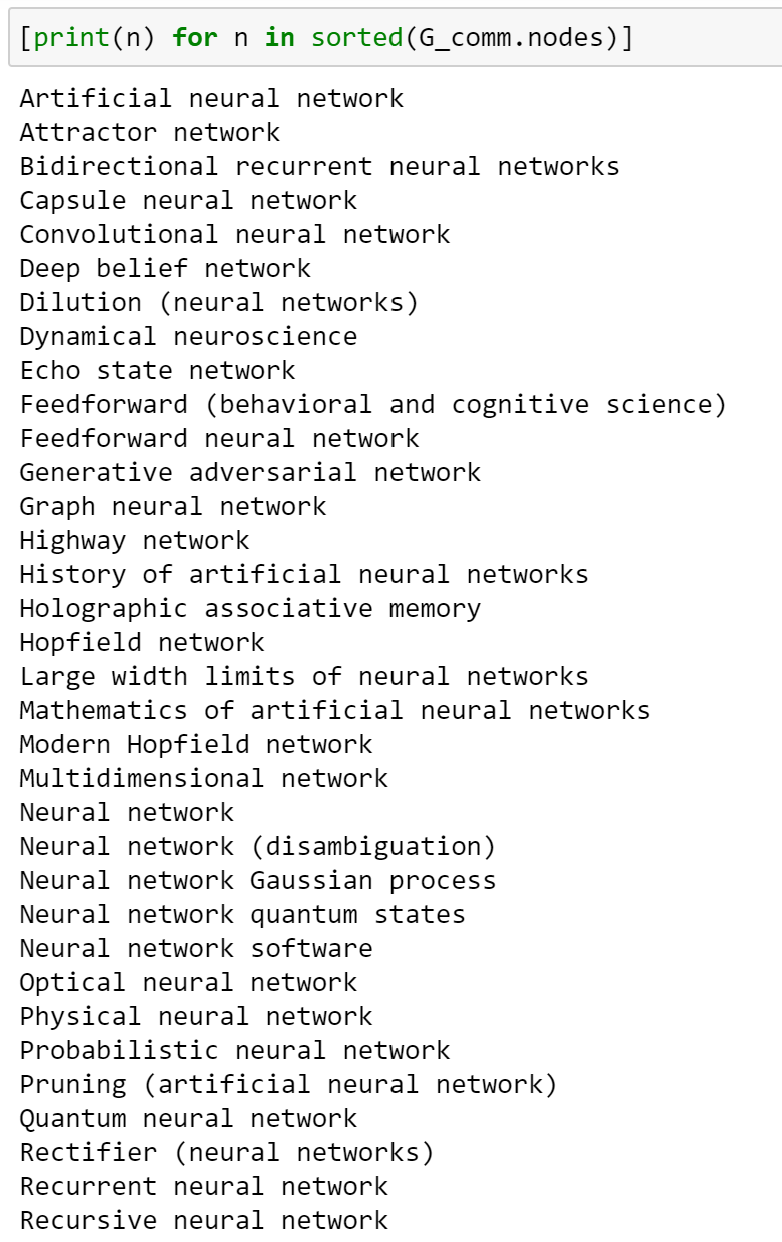
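A sketch of that k_core step, assuming networkx and a list of node sets such as networkx's community functions return. The helper name `core_of_largest_community` and the example graph are hypothetical. Note that `k=5` keeps nodes with at least five links *inside the core*, not five links overall.

```python
import networkx as nx

def core_of_largest_community(G, communities, k=5):
    """Return the k-core of the largest community (hypothetical helper)."""
    # nx.k_core refuses graphs with self-loops (a page linking to itself), so drop them
    H = G.copy()
    H.remove_edges_from(list(nx.selfloop_edges(H)))
    largest = max(communities, key=len)
    # Keep only links inside the community, then keep nodes with >= k links inside the core
    return nx.k_core(H.subgraph(largest), k=k)

# Hypothetical example: a 6-clique with a few loosely attached pages (and one self-loop)
G = nx.complete_graph(6)
G.add_edges_from([(0, 6), (1, 7), (7, 8), (6, 6)])
G.add_edges_from([(20, 21), (21, 22)])   # a second, smaller community
communities = [set(range(9)), {20, 21, 22}]
core = core_of_largest_community(G, communities, k=5)
print(sorted(core.nodes()))   # → [0, 1, 2, 3, 4, 5]
```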

What other pages are part of this community? Let's see a list of only pages that are in this community.

Super easy. Just like that, we can see the nodes that are part of any community. In this case, the community is Wikipedia pages related to Network Science and Social Network Analysis. This is a clean list of relevant pages.
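Listing a community's pages is short work once you know which community a page landed in. A minimal sketch, assuming `communities` is a list of node sets (the shape networkx's community functions return); the helper name and example pages are hypothetical.

```python
def pages_in_same_community(page, communities):
    """Return every page in the community that contains `page`, alphabetically."""
    for members in communities:
        if page in members:
            return sorted(members)
    raise KeyError(f"{page!r} is not in any community")

# Hypothetical communities, in the list-of-sets shape networkx returns
communities = [
    frozenset({"Network science", "Centrality", "Graph theory"}),
    frozenset({"Perceptron", "Deep learning"}),
]
print(pages_in_same_community("Centrality", communities))
# → ['Centrality', 'Graph theory', 'Network science']
```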

Here is another community:

Look closely! That is a computer science and computer graphics community. That community could actually be split into two communities, and there are only two bridge nodes holding the two communities together. See if you can identify them.
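If you want to check your answer, one way to find bridge nodes is a minimum node cut: the smallest set of nodes whose removal disconnects the community. This sketch assumes networkx and uses a hypothetical toy community (two clusters held together by two pages), not the real data. For a single bridge node, `nx.articulation_points` is the simpler tool.

```python
from itertools import combinations

import networkx as nx

def bridge_nodes(G, community):
    """Smallest set of nodes whose removal splits the community (hypothetical helper)."""
    sub = G.subgraph(community)
    if not nx.is_connected(sub):
        return set()   # already in pieces, so nothing is holding it together
    return nx.minimum_node_cut(sub)

# Hypothetical community: two tight clusters joined only through two pages
cs = ["CS-1", "CS-2", "CS-3", "CS-4"]
gfx = ["GFX-1", "GFX-2", "GFX-3", "GFX-4"]
G = nx.Graph()
G.add_edges_from(combinations(cs, 2))
G.add_edges_from(combinations(gfx, 2))
G.add_edges_from(("Bridge-A", page) for page in cs[:2] + gfx[:2])
G.add_edges_from(("Bridge-B", page) for page in cs[2:] + gfx[2:])
print(bridge_nodes(G, G.nodes))
```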

Let's look at another:

Look closely! This is a community related to Artificial Neural Networks. It would actually be a cool one to study if you are interested in Machine Learning. What are some of the relevant nodes/pages?
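One simple way to surface the relevant pages is to rank them by degree inside the community, counting only links to other members. A sketch assuming networkx; the helper `top_pages` and the page links are hypothetical.

```python
import networkx as nx

def top_pages(G, community, n=10):
    """Rank a community's pages by their number of links to other members (hypothetical helper)."""
    sub = G.subgraph(community)   # drops links that leave the community
    # Highest degree first; ties broken alphabetically so the order is stable
    ranked = sorted(sub.degree(), key=lambda pair: (-pair[1], pair[0]))
    return [page for page, _ in ranked[:n]]

# Hypothetical links, including one that points outside the community
G = nx.Graph([
    ("Artificial neural network", "Deep learning"),
    ("Artificial neural network", "Perceptron"),
    ("Artificial neural network", "Backpropagation"),
    ("Deep learning", "Backpropagation"),
    ("Deep learning", "Graph theory"),
])
nn_community = {"Artificial neural network", "Deep learning", "Perceptron", "Backpropagation"}
print(top_pages(G, nn_community, n=3))
# → ['Artificial neural network', 'Backpropagation', 'Deep learning']
```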

This would be a pretty cool starting point for learning about Neural Networks. Let's look at another community.

That's really cool. It's a network of different sciences and how they relate to each other. These pages would be interesting to use as seed nodes for a new crawl, to identify how various sciences are related. It is a small and incomplete community, and I did not intend for it to end up in the data, which makes it very cool that it did! One more!
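Re-crawling from seed nodes is essentially a breadth-first search that stops at a fixed depth. The Day 5 crawler's own interface isn't shown in this post, so this stdlib-only sketch takes a hypothetical `get_links(page)` function and an offline, made-up link table; swap in whatever fetches a page's outgoing links.

```python
from collections import deque

def crawl_from_seeds(seeds, get_links, max_depth=1):
    """Breadth-first crawl from seed pages; return the (page, linked page) edges found.

    `get_links(page)` is a hypothetical stand-in for whatever fetches a page's links.
    """
    seen = set(seeds)
    queue = deque((page, 0) for page in seeds)
    edges = []
    while queue:
        page, depth = queue.popleft()
        if depth >= max_depth:
            continue   # pages at the depth limit are recorded but not expanded
        for linked in get_links(page):
            edges.append((page, linked))
            if linked not in seen:
                seen.add(linked)
                queue.append((linked, depth + 1))
    return edges

# Offline stand-in for Wikipedia: a tiny, made-up link table
links = {
    "Physics": ["Chemistry", "Mathematics"],
    "Chemistry": ["Biology"],
    "Mathematics": ["Physics"],
    "Biology": ["Ecology"],
}
edges = crawl_from_seeds(["Physics"], lambda page: links.get(page, []), max_depth=2)
print(edges)
```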

This is a network of communication networks! It relates to network science because communication networks can be analyzed with network science.

With a network this large, there are many more communities in the data. I encourage you to play with the data and explore the networks. You will not learn this without practice. With practice, it becomes easy and intuitive.

I have shown that we can look at Wikipedia data not only from a whole network perspective but also from a community perspective, and the community level is a good way to hook onto the signal that you want. That's how I think of it, at least. Networks and data can be used together. They do not need to be separate things. They are powerful when used together.

K_Core and K_Corona

I use two techniques for exploring the layers of a network:

K_core allows me to see the core of any network, which helps me understand what is most important or influential in it. The k-core is the largest subgraph in which every node has at least k edges to other nodes in that subgraph. If you give K_core the highest k that still leaves nodes behind, it shows the most tightly interconnected nodes in the network.

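K_corona allows me to PEEL THE ONION. Think of a network as an onion: K_corona lets me look at it one layer at a time. The k-corona is the set of nodes in the k-core that have exactly k edges to other nodes in that core, so stepping k down from its maximum moves from the dense center of the network out toward its outer layers.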
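To make the onion idea concrete, here is a dependency-free sketch of k-core peeling: strip every node with at most k remaining links, repeat until none are left, then move to k + 1. The k at which a node is stripped is its core number, and the k-core is every node with core number at least k. networkx's `core_number` computes the same values with a faster algorithm; this version favors readability, and the helper names and example graph are hypothetical.

```python
def core_numbers(adjacency):
    """Peel an undirected graph like an onion; return {node: core number}.

    `adjacency` maps each node to the set of its neighbors (no self-loops).
    """
    degree = {node: len(nbrs) for node, nbrs in adjacency.items()}
    remaining = set(adjacency)
    core = {}
    k = 0
    while remaining:
        # Everything with at most k links left belongs to layer k
        layer = [node for node in remaining if degree[node] <= k]
        if not layer:
            k += 1   # this layer is fully peeled; move one layer deeper
            continue
        for node in layer:
            remaining.discard(node)
            core[node] = k
            # Peeling a node costs each remaining neighbor one link
            for nbr in adjacency[node]:
                if nbr in remaining:
                    degree[nbr] -= 1
    return core

def build_adjacency(edges):
    """Turn (u, v) pairs into an undirected {node: set(neighbors)} map."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency

# A 4-clique with a two-page tail hanging off it
edges = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("B", "D"), ("C", "D"),
         ("A", "E"), ("E", "F")]
core = core_numbers(build_adjacency(edges))
print(core)   # A-D have core number 3; E and F have 1
```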

Using K_core, I can see that the maximum depth I can go is six: the 6-core is the deepest core that still contains nodes.

If I go past six, the k-core comes back empty, and there is nothing left to draw. So, six is the number to keep in mind. Now that we have identified this using K_core, we will use the values [6, 5, 4, 3, 2, 1, 0] in K_corona to look at one layer at a time: first the nodes in the 6-core with exactly six edges inside it, then the nodes in the 5-core with exactly five edges inside it, and so on, down to 0, which catches any isolated nodes.
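In code, that loop might look like the sketch below, assuming networkx (the post doesn't name the library, though k_core and k_corona match networkx functions). One detail worth knowing: a k-corona only includes nodes with exactly k neighbors in the k-core, so a node whose core number is k but that has more than k neighbors in the k-core (like the hub of a star) appears in no corona. `nx.k_shell` returns every node whose core number is exactly k, so the shells cover each node exactly once. The helper `peel_layers` and the example graph are hypothetical.

```python
import networkx as nx

def peel_layers(G):
    """Yield (k, k-corona nodes, k-shell nodes), from the deepest core outward."""
    H = G.copy()
    H.remove_edges_from(list(nx.selfloop_edges(H)))      # core functions reject self-loops
    max_k = max(nx.core_number(H).values(), default=0)  # past this, nx.k_core(H, k) is empty
    for k in range(max_k, -1, -1):                       # e.g. 6, 5, 4, 3, 2, 1, 0
        corona = set(nx.k_corona(H, k))   # in the k-core, with exactly k neighbors there
        shell = set(nx.k_shell(H, k))     # core number exactly k
        yield k, corona, shell

# Hypothetical example: a 4-clique, a hub page linked to it, and three leaf pages on the hub
G = nx.complete_graph(4)
G.add_edges_from([(0, 4), (4, 5), (4, 6), (4, 7)])
G.add_node(8)   # an isolated page
for k, corona, shell in peel_layers(G):
    print(k, sorted(corona), sorted(shell))
```

Here the hub (node 4) has core number 1 but four neighbors in the 1-core, so it lands in the 1-shell and in no corona.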

Why?

Because I can use this to build a study guide. In terms of learning, the most connected nodes are more important than the least connected nodes. The most connected nodes relate to many other nodes, and understanding how things relate allows us to build a strong foundation. Studying fringe material is less useful, unless you are solving a fringe problem that it relates to. There is a time to focus, and a time to zoom out.

In the Jupyter notebook, I show simple code to convert the main Network Science community into a study guide. Please see that code.
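The notebook's code isn't reproduced here, but a study guide along these lines can be sketched in a few lines of standard Python, assuming you already have a `{page: core_number}` mapping (for example from networkx's `core_number`). This version groups pages by core number, deepest layer first, which lists every page exactly once; the helper name, title, and output format are hypothetical.

```python
from collections import defaultdict

def study_guide(core, title="Network Science"):
    """Turn {page: core number} into a study guide, most interconnected layer first."""
    layers = defaultdict(list)
    for page, k in core.items():
        layers[k].append(page)
    lines = [f"{title} study guide"]
    for k in sorted(layers, reverse=True):   # deepest layer first
        lines.append(f"Layer {k}:")
        lines.extend(f"  - {page}" for page in sorted(layers[k]))
    return "\n".join(lines)

# Hypothetical core numbers, e.g. the output of nx.core_number on a community
core = {"Graph theory": 2, "Centrality": 2, "Network science": 2, "Small-world network": 1}
print(study_guide(core))
```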

As a result, I now have a clean list of stuff to explore and learn more about.

LISTEN CLOSELY. This can be done for any topic. I have made the Wikipedia crawler available, and it can create the datasets to do this for any topic that you are curious about. For instance, I want to pull datasets about:

ALF - remember that show?

Science Fiction - A science fiction network would be awesome to explore

Work Topics

Companies I want to learn more about, and their relationships

People I want to learn more about, and their relationships

And so on. Network Science is all about RELATIONSHIPS between THINGS. Networks are essentially things and their relationships.

This madness:

Is really just a picture of THINGS and their RELATIONSHIPS with other THINGS. In this case, it is a network of TOPICS and their RELATIONSHIPS with other TOPICS. So, don't be afraid of networks or complexity. You just can't analyze them the same way you would a spreadsheet or a piece of text.

Finally, if you are interested, I threw the study guide into a document and made it available for everyone. You can access it here.

Goal: Show Something Useful

My goal for today's post was to make and show something useful. Unless you have a reason to study networks, it never feels worth the effort to study them. The same goes for any topic. But here is the thing: networks are all around us, and network data is easily accessible, even if you have to generate it yourself. If you are creative, you can use this to your advantage. You don't have to use everything for work.

I have used networks to create vocabulary lists by building a network of Jane Austen's word use and then extracting nodes with only a single edge (words used only once).

I have used networks to understand the flow of ideas across space and time.

I have used networks to troubleshoot server problems in minutes that used to take days.

I have used networks to study how malware evolves, and to use that knowledge to detect previously undetected malware.

And on and on and on and on and on.

I think in networks, because life is networks. Life is not spreadsheets. Life is not lists. Life is people and things and relationships.

So, I encourage you to learn to study networks. This series is a good place to start, as is my book, and I will recommend many other books during the course of this adventure.

Wikipedia Extras

In the Jupyter Notebook, I also included some code for working with the Wikipedia Python library. I show how to use it to pull summaries and text for any of the nodes in the graph. I thought that might be useful to spark creativity.
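A sketch of pulling summaries for a batch of nodes, assuming the third-party `wikipedia` package (`pip install wikipedia`), whose `wikipedia.summary(title, sentences=...)` returns a page's opening text and whose `wikipedia.page(title).content` returns the full text. The `fetch` parameter is a hypothetical hook so the loop can be tried offline with a stub; ambiguous or missing titles are skipped rather than crashing the loop.

```python
def summaries_for(pages, fetch=None):
    """Return {page: summary text, or None if it could not be fetched}."""
    if fetch is None:
        import wikipedia   # the third-party `wikipedia` package

        def fetch(title):
            # auto_suggest=False stops the library from swapping in a "suggested" title
            return wikipedia.summary(title, sentences=2, auto_suggest=False)

    results = {}
    for page in pages:
        try:
            results[page] = fetch(page)
        except Exception as err:   # e.g. wikipedia.exceptions.PageError or DisambiguationError
            print(f"skipping {page!r}: {err}")
            results[page] = None
    return results
```

For example, `summaries_for(["Graph theory", "Centrality"])` would fetch two short summaries, while passing `fetch=` a stub function lets you test the loop without network access.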

Thank You!

Thank you for following along on this adventure! If you would like to learn more about networks and network analysis, please buy a copy of my book!